“Hard drives will soon be a thing of the past.”

“The data centre of the future is all-flash.”

These predictions, while perennial, have not aged well.

Without question, flash storage is well suited to applications that require high performance and speed. Flash revenue is growing, as is all-flash array (AFA) revenue. But this growth is not coming at the expense of hard drives.

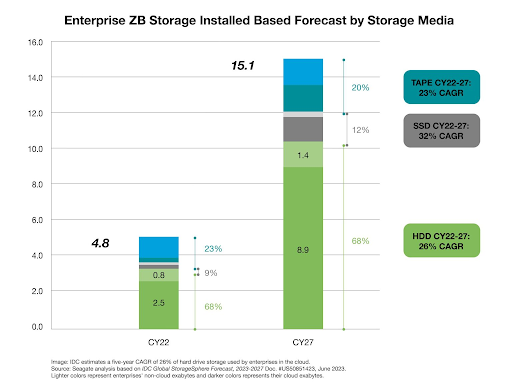

We are living in an era where the ubiquity of the cloud and the emergence of AI use cases have driven up the value of massive datasets. Hard drives, which today store by far the majority of the world’s exabytes (EB), are more indispensable to data centre operators than ever. Industry analysts expect hard drives to be the primary beneficiary of continued EB growth, especially in enterprise and large cloud data centres, where the vast majority of the world’s data resides.

Let’s take a closer look at three common myths about hard drives and solid-state drives (SSDs) and the reasons why both technologies will remain central to data storage architectures.

Myth 1: SSD pricing will soon match hard drive pricing.

Truth: SSD and hard drive pricing will not converge in the next decade.

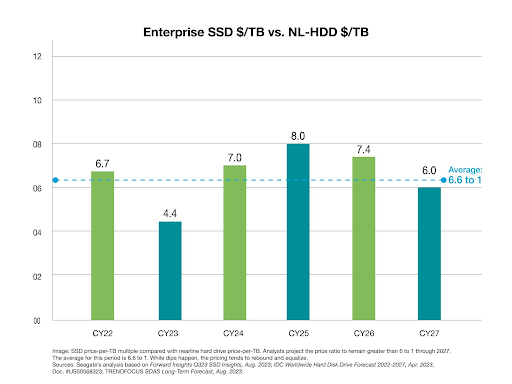

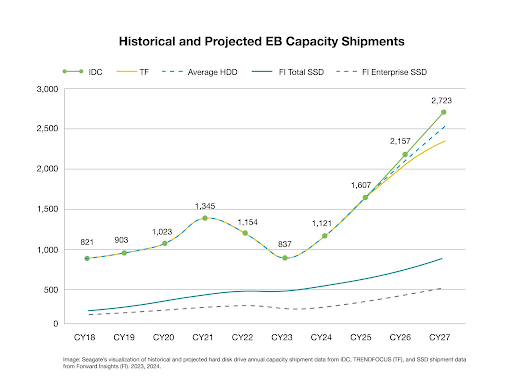

Hard drives hold a firm cost-per-terabyte (TB) advantage over SSDs, which makes them the cornerstone of data centre storage infrastructure. Our analysis of research by IDC, TRENDFOCUS, and Forward Insights confirms that hard drives will remain the most cost-effective option for most enterprise workloads. Enterprise SSDs are projected to cost at least six times as much per TB as enterprise hard drives through at least 2027.

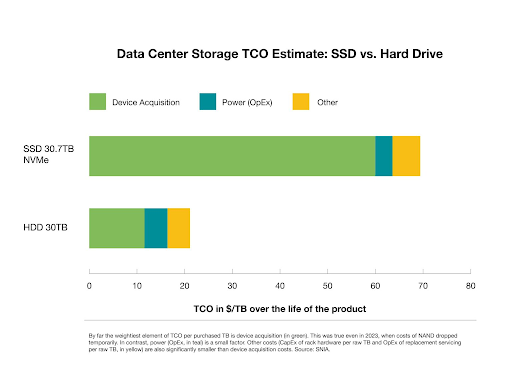

This differential is especially pronounced in the data centre, where device acquisition cost is the largest component of total cost of ownership (TCO). When all storage system costs are taken into account, hard-drive-based systems deliver a far lower TCO per TB.
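To make the per-TB TCO argument concrete, here is a minimal sketch comparing hard drive and SSD costs for a hypothetical 10 PB deployment. Only the 6-to-1 price ratio comes from the text above; the $15/TB hard drive price and the non-acquisition costs (power, space, service) are placeholder assumptions, with the SSD operating cost deliberately set lower to give flash the benefit of the doubt.

```python
from dataclasses import dataclass

# Placeholder inputs for illustration only; not figures from the article.
HDD_PRICE_PER_TB = 15.0  # assumed enterprise hard drive price, USD/TB
SSD_PREMIUM = 6.0        # the "at least six times" per-TB ratio cited above


@dataclass
class MediaCost:
    name: str
    acquisition_per_tb: float  # device purchase cost, USD/TB
    other_per_tb: float        # assumed power, space, and service cost, USD/TB

    def tco_per_tb(self) -> float:
        return self.acquisition_per_tb + self.other_per_tb

    def acquisition_share(self) -> float:
        # Fraction of TCO that comes from buying the devices.
        return self.acquisition_per_tb / self.tco_per_tb()


hdd = MediaCost("HDD", HDD_PRICE_PER_TB, other_per_tb=5.0)
ssd = MediaCost("SSD", HDD_PRICE_PER_TB * SSD_PREMIUM, other_per_tb=4.0)

capacity_tb = 10_000  # hypothetical 10 PB deployment
for m in (hdd, ssd):
    print(
        f"{m.name}: ${m.tco_per_tb():.2f}/TB, "
        f"acquisition share {m.acquisition_share():.0%}, "
        f"total ${m.tco_per_tb() * capacity_tb:,.0f}"
    )
print(f"SSD/HDD TCO ratio: {ssd.tco_per_tb() / hdd.tco_per_tb():.2f}")
```

With these placeholders, acquisition makes up 75% of hard drive TCO and about 96% of SSD TCO, and the SSD system costs 4.7 times as much per TB. Real quotes would change the numbers but not the structure: as long as acquisition dominates, the device price ratio largely sets the TCO ratio.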

Myth 2: The NAND supply can ramp up to replace all hard drive capacity.

Truth: Replacing hard drives with NAND would require untenable capital expenditure.

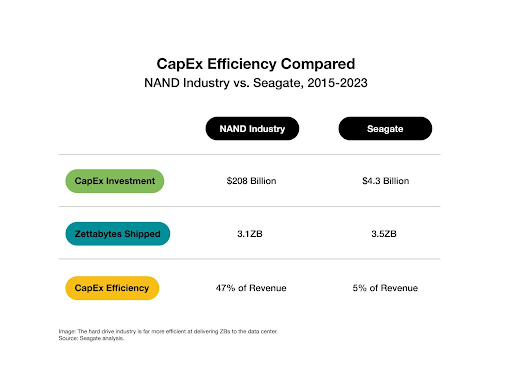

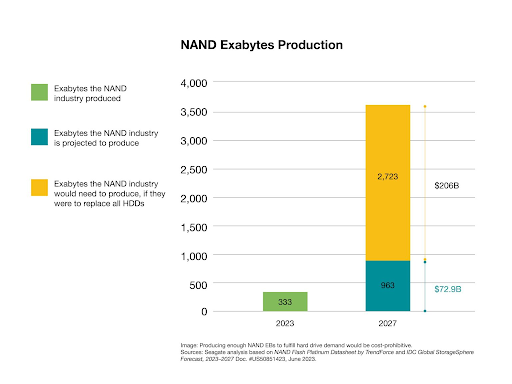

The notion that the NAND industry could rapidly increase its supply to replace all hard drive capacity isn’t just optimistic—such an attempt would lead to immense financial strain.

According to a report from industry analyst Yole Intelligence, the NAND industry as a whole shipped 3.1 zettabytes (ZB) from 2015 to 2023 and had to invest a staggering $208 billion in capital expenditure (CapEx) to do so, approximately 47% of its combined revenue.
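The capital intensity behind these figures can be worked out directly. The sketch below uses only the numbers quoted above, with decimal units (1 ZB = 10^9 TB); the per-TB average and the implied revenue are derived figures, not values stated in the report, and the revenue figure inherits the imprecision of "approximately 47%".

```python
# Figures from the Yole Intelligence report as quoted in the article.
TB_PER_ZB = 1_000_000_000           # decimal units: 1 ZB = 10^9 TB

nand_capex_usd = 208e9              # NAND industry CapEx, 2015-2023
nand_shipped_tb = 3.1 * TB_PER_ZB   # NAND shipped over the same period
capex_share_of_revenue = 0.47       # "approximately 47%" of combined revenue

# Average capital spent per TB shipped over the period (derived).
historical_capex_per_tb = nand_capex_usd / nand_shipped_tb

# Cumulative revenue implied by the 47% ratio (derived and approximate).
implied_revenue_usd = nand_capex_usd / capex_share_of_revenue

print(f"Average CapEx per TB shipped: ${historical_capex_per_tb:.2f}")
print(f"Implied cumulative revenue: ${implied_revenue_usd / 1e9:.0f}B")
```

This works out to roughly $67 of CapEx for every TB of NAND shipped over the period, against an implied cumulative revenue of about $443 billion.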

In contrast, the hard drive industry addresses the vast majority—almost 90%—of large-scale data centre storage needs in a highly capital-efficient manner. Simply put, the hard drive industry is far more efficient at delivering ZBs to the data centre.

Could the flash industry fully replace the hard drive industry’s capacity output by 2028?

The Yole Intelligence report indicates that from 2025 to 2027, the NAND industry will invest about $73 billion, which is estimated to yield 963 EB of output for enterprise SSDs and other products. This translates to an investment of about $76 per TB of flash storage output. Applying that same capital cost per TB, it would require a staggering $206 billion in additional investment to support the 2.7 ZB of hard drive capacity forecast to ship in 2027. In total, that’s nearly $279 billion of investment to serve a total addressable market of approximately $25 billion, a ratio of more than 10 to 1. This level of investment is unlikely for an industry facing uncertain returns.
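The replacement-cost estimate follows from three steps of arithmetic. Here is a minimal sketch that reproduces them from the inputs quoted above, assuming decimal units (1 EB = 10^6 TB) and the same capital cost per TB for the additional capacity as for the planned output.

```python
TB_PER_EB = 1_000_000  # decimal units: 1 EB = 10^6 TB

# Inputs as quoted in the article (Yole Intelligence forecast and market estimate).
planned_capex_usd = 73e9        # NAND CapEx, 2025-2027
planned_output_eb = 963         # output that CapEx is estimated to yield
hdd_shipments_2027_eb = 2_700   # 2.7 ZB of hard drive capacity forecast for 2027
market_usd = 25e9               # approximate total addressable market

# Step 1: capital cost per TB of flash output.
capex_per_tb = planned_capex_usd / (planned_output_eb * TB_PER_EB)

# Step 2: extra CapEx to also cover the hard drive shipments at that rate.
additional_capex_usd = capex_per_tb * hdd_shipments_2027_eb * TB_PER_EB

# Step 3: total investment and its size relative to the market it would serve.
total_capex_usd = planned_capex_usd + additional_capex_usd
investment_to_market = total_capex_usd / market_usd

print(f"CapEx per TB: ${capex_per_tb:.2f}")
print(f"Additional CapEx: ${additional_capex_usd / 1e9:.1f}B")
print(f"Total CapEx: ${total_capex_usd / 1e9:.1f}B")
print(f"Investment / market: {investment_to_market:.1f}x")
```

From the rounded published inputs this gives about $75.80 per TB, roughly $205 billion of additional CapEx, and a total near $278 billion, slightly below the $206 billion and $279 billion above; the small gap presumably reflects rounding in the published inputs. Either way, the investment comes to more than ten times the market it would serve.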

Myth 3: Only all-flash arrays can meet modern performance requirements.

Truth: Optimal enterprise storage architecture mixes media types to balance cost, capacity, and performance.

Proponents of all-flash advise enterprises to “simplify” by going all-in on flash for high performance, lest they be unable to keep pace with modern workloads. This zero-sum logic fails for three reasons.

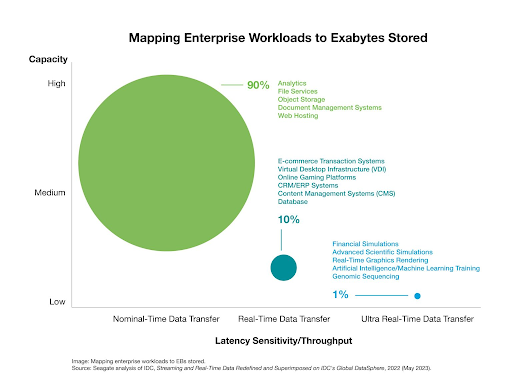

First, most of the world’s data resides in the cloud and in large data centres, where only a small percentage of the workload requires the highest level of performance. This is why, according to IDC, hard drives have accounted for almost 90% of the storage installed base in cloud service providers and hyperscale data centres over the last five years. In some cases, hybrid storage systems that mix media perform as well as, or better than, all-flash systems.

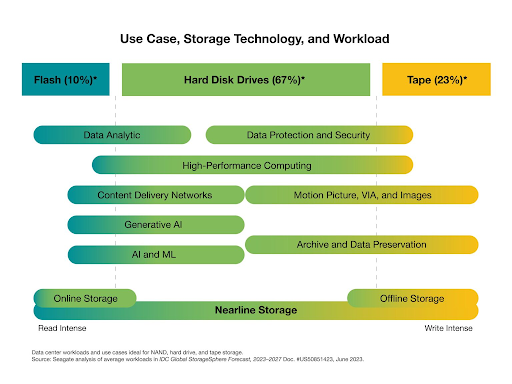

Second, TCO considerations are key. Optimal TCO is achieved by aligning the most cost-effective media—hard drive, flash, or tape—to the workload requirement. Hard drives and hybrid arrays are a great fit for most enterprise and cloud storage use cases. While flash storage excels in read-intensive scenarios, its endurance diminishes with increased write activity. Technologies like triple-level cell (TLC) and quad-level cell (QLC) flash can handle data-heavy workloads, but the economic rationale weakens for larger datasets or long-term retention. In these cases, hard drives, with their growing areal density, offer a more cost-effective solution.
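The principle of aligning the most cost-effective medium to each workload can be sketched as a simple selection rule: pick the cheapest medium that satisfies the workload's requirements. Everything in this sketch is a hypothetical illustration: the relative costs (only the 6-to-1 flash-to-hard-drive ratio echoes the text), the per-TB I/O capabilities, and the workload profiles. It also ignores factors such as flash write endurance and retention period that a real placement decision would weigh.

```python
from dataclasses import dataclass


# Hypothetical relative costs and capabilities, for illustration only.
@dataclass(frozen=True)
class Medium:
    name: str
    cost_per_tb: float      # relative cost per TB (hard drive = 1.0)
    max_iops_per_tb: float  # random I/O the medium can sustain per TB stored
    online: bool            # False for media that need library retrieval


MEDIA = (
    Medium("tape", cost_per_tb=0.3, max_iops_per_tb=0.0, online=False),
    Medium("hdd", cost_per_tb=1.0, max_iops_per_tb=20.0, online=True),
    Medium("flash", cost_per_tb=6.0, max_iops_per_tb=10_000.0, online=True),
)


@dataclass(frozen=True)
class Workload:
    name: str
    iops_per_tb: float  # random I/O the workload demands per TB
    needs_online_access: bool


def cheapest_medium(workload: Workload) -> Medium:
    """Return the lowest-cost medium that meets the workload's requirements."""
    candidates = [
        m for m in MEDIA
        if m.max_iops_per_tb >= workload.iops_per_tb
        and (m.online or not workload.needs_online_access)
    ]
    if not candidates:
        raise ValueError(f"no medium satisfies {workload.name}")
    return min(candidates, key=lambda m: m.cost_per_tb)


for w in (
    Workload("transactional database", iops_per_tb=5_000, needs_online_access=True),
    Workload("video and analytics lake", iops_per_tb=5, needs_online_access=True),
    Workload("compliance archive", iops_per_tb=0, needs_online_access=False),
):
    print(f"{w.name}: {cheapest_medium(w).name}")
```

Under these assumptions, only the I/O-intensive database lands on flash; the capacity-heavy lake goes to hard drives and the cold archive to tape.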

Third, the argument that an AFA is “simpler” than a tiered architecture often solves a problem most deployments don’t actually have. Many hybrid storage systems use well-proven software-defined architectures that seamlessly integrate diverse media types. In scale-out cloud data centre architectures, file systems or software-defined storage manage workloads across locations and regions. AFAs and SSDs are a great fit for high-performance, read-intensive workloads, but it’s a mistake to extrapolate from niche use cases to the mass market and hyperscale, where AFAs are an unnecessarily expensive way to deliver what hard drives already provide at a much lower TCO.
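One way a software layer can combine media is to keep all data on a hard drive capacity tier and cache hot reads on a small flash tier. The toy class below illustrates that idea with a least-recently-used (LRU) cache; `HybridStore` and its methods are hypothetical names, not any product's API, and production tiering engines use richer policies (heat-based migration, write-back caching, placement across sites).

```python
from collections import OrderedDict


class HybridStore:
    """Toy two-tier store (hypothetical, not any product's API).

    Every object is written to the hard drive capacity tier; a small flash
    tier keeps copies of the most recently read objects, evicting the least
    recently used one when full.
    """

    def __init__(self, flash_capacity: int):
        self.flash_capacity = flash_capacity  # max objects cached on flash
        self.hdd = {}                         # capacity tier: key -> bytes
        self.flash = OrderedDict()            # performance tier, in LRU order
        self.flash_hits = 0
        self.hdd_reads = 0

    def put(self, key: str, value: bytes) -> None:
        self.hdd[key] = value
        self.flash.pop(key, None)  # drop any stale cached copy

    def get(self, key: str) -> bytes:
        if key in self.flash:
            self.flash.move_to_end(key)  # mark as most recently used
            self.flash_hits += 1
            return self.flash[key]
        value = self.hdd[key]  # cache miss: read from the hard drive tier
        self.hdd_reads += 1
        self.flash[key] = value  # promote to flash
        if len(self.flash) > self.flash_capacity:
            self.flash.popitem(last=False)  # evict least recently used
        return value


store = HybridStore(flash_capacity=2)
for k in "abcde":
    store.put(k, k.encode())
for k in "ababca":
    store.get(k)
print(store.flash_hits, store.hdd_reads, list(store.flash))
```

Here five objects live on the hard drive tier while only two fit on flash; repeated reads of the hot objects are served from flash, which is the basic trade a hybrid system makes between capacity cost and performance.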

The Hybrid Reality

The data bears this out. Seagate’s analysis of industry forecasts predicts a nearly 250% increase in exabyte capacity shipped on hard drives by 2028. Hard drives are here to stay, working in tandem with flash storage.

The smartest data architectures are not about ideology but about economics and efficiency. For the foreseeable future, that means leveraging the right storage medium for the right job.