Microsoft has announced that its Azure ND GB300 v6 virtual machines achieved an aggregate inference speed of 1.1 million tokens per second on Meta’s Llama 2 70B model.

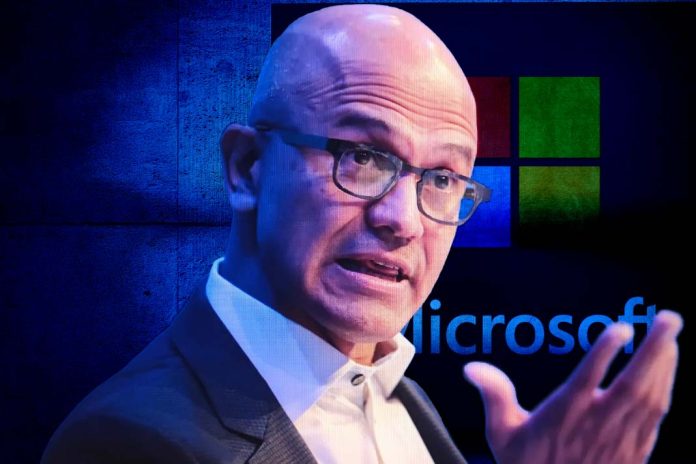

CEO Satya Nadella took to X, calling it “An industry record made possible by our longstanding co-innovation with NVIDIA and expertise in running AI at production scale.”

The Azure ND GB300 v6 is a virtual machine (VM) powered by NVIDIA’s Blackwell Ultra GPUs, specifically the NVIDIA GB300 NVL72 system.

The system contains 72 NVIDIA Blackwell Ultra GPUs and 36 NVIDIA Grace CPUs in a single rack-scale configuration.

The VM is optimised for inference workloads, featuring 50% more GPU memory and a 16% higher TDP (Thermal Design Power) than the previous GB200 generation.

To measure the performance gains, Microsoft ran the Llama 2 70B benchmark (in FP4 precision) from MLPerf Inference v5.1 on each of the 18 ND GB300 v6 virtual machines in one NVIDIA GB300 NVL72 domain.

The runs used NVIDIA TensorRT-LLM as the inference engine.

“One NVL72 rack of Azure ND GB300 v6 achieved an aggregated 1,100,000 tokens/s,” said Microsoft.

“This is a new record in AI inference, beating our own previous record of 865,000 tokens/s on one NVIDIA GB200 NVL72 rack with the ND GB200 v6 VMs.”

Since the system contains 72 Blackwell Ultra GPUs, this translates to roughly 15,200 tokens/sec/GPU.
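
The per-GPU figure can be reproduced from the numbers quoted above. The short Python sketch below is illustrative only: it uses the rounded headline values (1,100,000 and 865,000 tokens/s, 72 GPUs, 18 VMs), and the variable and function names are hypothetical. With those rounded inputs the per-GPU result comes out near 15,300, slightly above the reported figure of about 15,200; the gap is presumably due to rounding in the headline number, which is an assumption rather than something stated in the results.

```python
# Figures as quoted in the article (rounded headline values).
AGGREGATE_TOKENS_PER_SEC = 1_100_000  # one GB300 NVL72 rack
PREVIOUS_RECORD = 865_000             # one GB200 NVL72 rack
GPUS_PER_RACK = 72
VMS_PER_RACK = 18


def rack_breakdown(aggregate: float, gpus: int, vms: int) -> dict:
    """Split an aggregate rack throughput into per-VM and per-GPU shares."""
    return {
        "gpus_per_vm": gpus // vms,
        "tokens_per_sec_per_gpu": aggregate / gpus,
        "tokens_per_sec_per_vm": aggregate / vms,
    }


breakdown = rack_breakdown(AGGREGATE_TOKENS_PER_SEC, GPUS_PER_RACK, VMS_PER_RACK)
# Gain over the previous record, as a percentage.
gain_pct = (AGGREGATE_TOKENS_PER_SEC / PREVIOUS_RECORD - 1) * 100

print(f"GPUs per VM:        {breakdown['gpus_per_vm']}")
print(f"Tokens/s per GPU:   {breakdown['tokens_per_sec_per_gpu']:,.0f}")
print(f"Tokens/s per VM:    {breakdown['tokens_per_sec_per_vm']:,.0f}")
print(f"Gain over GB200:    {gain_pct:.1f}%")
```

The same arithmetic implies four GPUs per VM and a gain of roughly 27% over the earlier GB200 record.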

Microsoft provided a detailed breakdown of the benchmark run, along with the log files and full results.

The performance was verified by Signal65, an independent performance-validation and benchmarking firm.

“This milestone is significant not just for breaking the one-million-token-per-second barrier and being an industry-first, but for doing so on a platform architected to meet the dynamic use and data governance needs of modern enterprises,” said Russ Fellows, VP of Labs at Signal65, in a blog post.

Signal65 added that the Azure ND GB300 delivers a 27% inference performance improvement over the previous NVIDIA GB200 generation for only a 17% increase in its power specification.
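
As a rough check on what those two percentages imply together, the sketch below divides the performance ratio by the power ratio. It assumes the 17% figure can be treated as a proxy for actual power draw, which the article does not state (a power specification is not a measured consumption), and the function name is hypothetical.

```python
def relative_perf_per_watt(perf_gain_pct: float, power_gain_pct: float) -> float:
    """Ratio of new to old performance per unit of power specification."""
    return (1 + perf_gain_pct / 100) / (1 + power_gain_pct / 100)


# 27% more inference performance for a 17% higher power specification.
ratio = relative_perf_per_watt(27, 17)
print(f"Performance per unit of power spec: {(ratio - 1) * 100:.1f}% higher")
```

Under that assumption, the GB300 delivers roughly 8 to 9% more inference throughput per unit of specified power than the GB200 generation.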

“Compared to the NVIDIA H100 generation, GB300 offers nearly a 10x increase for inference performance at a nearly 2.5x power efficiency gain when measured at rack level,” the company added.
